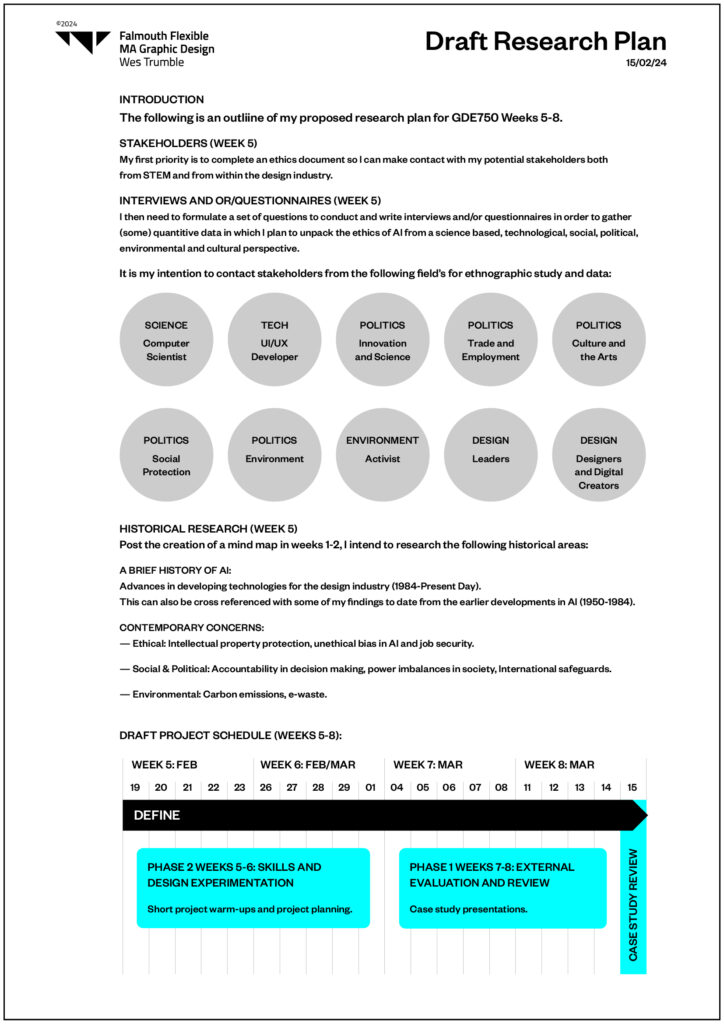

Development of key theme and areas of interest

NOTES FROM THE ETHICS HANDBOOK

1) Underpin all of its work with common values of rigour and integrity.

2) Conform to all ethical, legal and professional obligations incumbent on its work.

3) Nurture a research environment that supports research of the highest standards of rigour and integrity.

4) Use transparent, robust and fair processes to handle allegations of misconduct.

5) Continue to monitor, and where necessary improve, the suitability and appropriateness of the mechanisms in place to provide assurances over the integrity of research.

6) Consider how your actions and decisions might affect people, values, and the environment.

All researchers (regardless of level or experience) need to adhere to a range of legal frameworks, including: the Data Protection Act 1998 and General Data Protection Regulation 2016; the Equality Act 2010; the Children Act (1989 and subsequent); the Human Rights Act (1998); the Health and Safety at Work Act (1974); and the Mental Capacity Act (2005 and subsequent).

INFORMED CONSENT

1) Participants in research should give their informed consent prior to participation.

2) Consent should be obtained in writing.

3) You should make clear a participant’s right to refuse to participate in, or to withdraw from, the research at any stage.

4) For consent to be legally valid, there are three requirements:

— The potential participant must be competent, i.e. of adequate age and having the necessary mental capacity.

— The consent must be voluntary, i.e. the potential participant must be free from inducement, coercion or undue influence.

— Adequate and appropriate information must have been given to the potential participant.

Informed consent in research is a dynamic on-going process, not a one-off event, and may require renegotiation over time, depending on the nature and timescale of the project and the use and dissemination of any data.

The quality of the consent is affected by a number of factors, these being:

1) The format of the record of consent.

2) The competence and capacity of the subject/participant to give consent.

You should ensure that members of an institutionalised group (employees) understand that the institutional consent places them under no greater obligation to participate in the research. Avoid bias in participants’ responses, by not concealing or withholding particular information.

Consent and research in public and with groups:

1) Such research is only carried out in public contexts:

— Where possible approval is sought from relevant authorities.

— Appropriate individuals are informed that the research is taking place.

— No details that could identify specific individuals are given in any reports on the research unless reporting on public figures acting in their public capacity.

— Particular sensitivity is paid to local cultural values and to the possibility of being perceived as intruding upon or invading the privacy of people who, despite being in an open public space, may feel they are unobserved.

Ensure that members of a group understand they are being observed for research purposes.

DESIGNING CONSENT FORMS

An essential element of informed consent is telling participants clearly the following:

— The purpose of the research, expected duration, and procedures.

— What they are being asked to do.

— Their right to decline to participate and to withdraw from the research once participation has begun.

— The foreseeable consequences of declining or withdrawing.

— Reasonably foreseeable factors that may be expected to influence their willingness to participate such as potential risks, discomfort, or adverse effects.

— Any prospective research benefits.

— Limits of confidentiality.

— Incentives for participation.

— Who to contact for questions about the research.

Include contact details for your module leader. This person must be fully informed of your research and aware that they are a named contact for participants. Other contact details may also be appropriate; for example, the site at which the participation activity is taking place (e.g. within a museum).

DATA PROTECTION AND SECURITY

Data protection is concerned with how data about individuals is obtained, processed and kept (stored, used and held), and the uses or disclosure of such information.

There is an obligation on the person or organisation holding the data to make sure it is kept securely to assure its use only for the purposes for which it was obtained, by those authorised to use it; that it is not kept longer than necessary; and that it is not passed on to third parties.

You must have a justifiable reason for collecting personal data. The most likely legal basis will lie in the researcher having obtained active, informed consent. It is essential that you tell data subjects what the data will be used for.

Ensure that personal data you hold is correct and not misleading as to any matter of fact. You must destroy or anonymise personal data once it has served its purpose. Data must be kept secure, not only from unauthorized access but also from accidental damage. If a data breach puts individuals' rights and freedoms at risk, you have 72 hours to notify the supervisory authority and provide relevant details. In the event of a data breach, you must contact FX Plus IT Services immediately.

Data Subjects have the right to see all data that is held on them and can request this information by means of a Subject Access Request. Requests should be responded to within one month. Data Subjects have the right to rectify incorrect data held on them. Individuals can make a request for erasure verbally or in writing, and you have one month to respond to a request. Where the accuracy of data is contested, Data Subjects have the right to request that data is not processed while the investigation takes place. Data Subjects have the right to request a machine-readable copy of personal data. Data Subjects have the right to object to the processing of their personal data in certain circumstances. Research participants must be told about their right to object.

Keep a summary of the following:

1) Contact details, along with those of joint controllers.

2) The purpose of the processing.

3) The types of personal data being processed.

4) How long you are keeping the data.

5) The security measures that have been put in place.
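The five items above could be kept as one small structured record per project. The following is only a minimal sketch: the class name, field names and example values are hypothetical illustrations, not a format prescribed by the handbook or the University.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class ProcessingRecord:
    """Summary of one project's data processing (illustrative field names)."""
    controller_contact: str        # 1) your contact details
    purpose: str                   # 2) the purpose of the processing
    data_types: list[str]          # 3) the types of personal data processed
    collected_on: date             # assumed start of the retention period
    retention_days: int            # 4) how long you are keeping the data
    security_measures: list[str]   # 5) the security measures in place
    joint_controllers: list[str] = field(default_factory=list)  # 1) joint controllers

    def destroy_or_anonymise_by(self) -> date:
        """Date by which the data should be destroyed or anonymised."""
        return self.collected_on + timedelta(days=self.retention_days)

    def is_overdue(self, today: date) -> bool:
        """True if the data has been kept past its retention period."""
        return today > self.destroy_or_anonymise_by()


# Hypothetical example values, for illustration only.
record = ProcessingRecord(
    controller_contact="researcher@university.example",
    purpose="Interviews with exhibition visitors",
    data_types=["name", "audio recording"],
    collected_on=date(2024, 1, 15),
    retention_days=180,
    security_measures=["encrypted institutional storage"],
)
print(record.destroy_or_anonymise_by())
print(record.is_overdue(date(2024, 8, 1)))
```

Storing the collection date and retention period together makes it straightforward to check when data should be destroyed or anonymised.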

CONDUCTING AN ETHICS REVIEW

Medium Risk describes research in which there is potential for harm or distress but where the likelihood is low and these risks can be mitigated with simple, standardised procedures.

Medium risk activities include:

— Research involving individuals or groups.

— Research involving access to records of personal or confidential information concerning identifiable individuals.

— Research involving the participation or observation of animals.

— Research involving interaction with individuals or communities where different cultural perceptions of ethics might result in misunderstandings.

Medium risk categories

Will your project involve participants?

Yes / No

Will it be necessary for participants to take part in the study without their knowledge and consent at the time? (e.g. covert observation of people in non-public places)

Yes / No

Will financial inducements (other than reasonable expenses and compensation for time) be offered to participants?

Yes / No

Will your project involve collecting participant data (e.g. personal and/or sensitive data referring to a living individual)?

Yes / No

Will your project involve accessing secondary data that is not in the public domain (e.g. personal data collected by another user)?

Yes / No

Will your project involve accessing commercially sensitive information?

Yes / No

Could your project have negative environmental impacts (e.g. disturbance of natural habitats; damage to, or contamination of, buildings/artefacts/wildlife)?

Yes / No

PARTICIPANT INFORMATION SHEET

A participant information sheet should allow a potential participant to decide whether or not they wish to take part in the research. It should provide clear information on the essential elements of the specific study: the topic being studied, the voluntary nature of involvement, what will happen during and after the study, the participant's responsibilities, and the potential risks or inconvenience balanced against any possible benefits.

Briefly describe your project in a way that will make sense to the participant – this will answer the question ‘why are you asking me to take part?’.

Secondly, you need to explain clearly what you are asking a participant to do; this answers the question ‘what are you asking me to do?’.

University Logo

University logos should be used on all public-facing documents.

Document heading

We recommend the document is headed Participant Information and Consent Form.

Research title

One consistent title should appear on all the documents and be comprehensible to a lay person. Ask yourself: Does this explain the study in simple English?

Invitation paragraph

The invitation is to ask the potential participant to consider the study and then decide whether to take part. Both must be clearly explained. The following is an example:

I would like to invite you to take part in my research. Before you decide I would like you to understand why the research is being carried out and what it would involve for you. I will go through the information sheet with you and answer any questions you have.

Purpose of the research

Answer the question: What is the purpose of the study? Purpose is an important consideration for participants and we recommend that you present it clearly and succinctly.

Explain why you are inviting this participant to take part in your research.

Answer the question: Why have I been invited? You should explain briefly why and how the participant was chosen or recruited and how many others will be in the study.

Explain that taking part is optional

Answer the question: Do I have to take part? You should explain that taking part in the research is entirely voluntary. The following is an example:

It is up to you to decide to join the research. I will describe the study and go through this information sheet. If you agree to take part, I will then ask you to sign a consent form. You are free to withdraw at any time, without giving a reason.

Explain what the participant will be asked to do

Answer the two questions: What will happen to me if I take part? What will I have to do? To answer these questions, we suggest you try to “put yourself in the participant's shoes”.

This section should include:

— How long the participant will be involved in the research.

— How long the research will last (if this is different).

— How often the participant will need to meet with you.

— How long these meetings will be and where they will take place.

— What exactly will happen e.g. access to personal information, a questionnaire, interview, discussion group, an activity, etc.

Set down briefly and clearly what you will expect from your participants.

Use the most appropriate format (e.g. tables, diagrams, photos, etc.) and not necessarily just words. The detail required will depend on the complexity of the study and who you are communicating with and the context in which you are approaching them.

You should inform the participant if your study will involve video/audio-taping or photography. Specific consent will be needed if you will publish material that identifies a participant.

Consider each type of participation separately

Many research projects involve more than one type of participation. For example, you may be conducting a series of interviews with experts in a field (e.g. exhibition curators) and you may be running workshops (e.g. for visitors to exhibitions). These participant communities are different and their experience of engagement with the research will be different. It follows, therefore, that you need to provide different participant information and consent forms tailored for each type of participant.

Expenses and payments (Not generally applicable to student research projects)

You should explain if expenses (e.g. travel, meals, child-care, compensation for loss of earnings, etc.) are available and you should consider whether anything that you are intending to give as a 'thank-you' for participation, should be detailed in the information sheet. The arrangements for any other payment should be given.

What are the possible disadvantages and risks of taking part?

Any risks, discomfort or inconvenience to the participant should be outlined. You should consider insurance issues and explain any implications in the information you supply.

What are the possible benefits of taking part?

Explain these, but it is important not to exaggerate any possible benefits to the participants themselves. You ought to consider how you can give them access to your findings, if that is appropriate and they are interested.

What happens when the research ends?

Will there be any further contact with the participant? If so, explain what this will be.

Explain how a participant can withdraw from the study

Answer the question: What will happen if I don't want to carry on with the study?

Explain what the subject can and can't expect if they withdraw. It may not be possible or desirable for data to be extracted and destroyed. The position on retention/destruction of data/artefacts on withdrawal must be made clear so that the participant can make an informed decision about whether or not to take part in the first place.

What if there is a problem?

You should inform participants who to contact if they have questions or concerns about your project. A participant may want to contact you or the University. To accommodate either situation we recommend that the participant information includes information on how to contact the researcher (use only institutional contact information, not personal contact information such as personal telephone number or home address, e.g. give your University email address), and also information on how to contact your module tutor (or other designated Department contact).

Participant confidentiality

Answer the question: Will my taking part in this study be kept confidential?

You should tell the participant how their confidentiality will be safeguarded during and after the study. You may wish to tell the participants how your procedures for handling, processing, storage and destruction of their data match the appropriate legislation.

The participant should be told how their data will be collected; that it will be stored securely, giving the custodian and level of identifiability (e.g. whether it will be anonymised during storage, etc.); and what it will be used for. It must be made clear whether the data is to be retained for use in future studies and whether further Research Ethics approval will be sought; who will have access to identifiable data; how long it will be retained, and that it will be disposed of securely. Consider what your research requires – for example do not say data will be anonymised if it is critical to the research that data can be attributed to sources. Participants have the right to check the accuracy of data held about them and correct any errors.

What will happen to the results of the research study?

Participants often want to know the results of research they have taken part in. You should tell participants what will happen to the results of the research, whether and how it is intended to make public (e.g. publish, exhibit, broadcast) the results and how the results will be made available to participants. You should add that participants will not be identified in any report/publication unless they have given their consent.

Keeping a record of participant consent

Participants should be provided with their own copies of the participant information, which should be dated. It is easiest to maintain a record of participant consent if you append the consent items to the participant information document. The example of the form of the consent record given below will be suitable for many studies, and may be attached to, or be part of, the participant information sheet. The participant is consenting to everything described in the text of the information sheet.

For some studies a fuller, itemized, or hierarchical consent form may be needed to cover important issues, especially if additional elements are optional for the participant. These may include: consent to use of audio/video-taping, with possible use of verbatim quotation or use of photographs; and transfer of sensitive personal data to countries with less data protection.

The signatories to the consent should be those who are involved in the consent process, e.g. the participant and the researcher. An independent witness is not routinely required except in the case of consent by a participant who is blind, illiterate, etc.

Contact Details

Always provide University contact details on an information form. Do not use personal contact details.

Participant consent form

Once you have set out clearly the information to inform your participant, the consent record is fairly simple. We recommend that there are two copies: 1 for participant; 1 for researcher's file. Below we show typical content for this that you can customize to suit your particular project.

University Logo

Title of Project

Name of Researcher

Please initial box

I confirm that I have read and understand the information provided above dated………..(version…..) for the research study. I have had the opportunity to consider the information, ask questions and I have had these answered satisfactorily.

I understand that my participation is voluntary and that I am free to withdraw at any time without giving any reason, without my legal rights being affected.

I agree to take part in the above study.

Include:

Name of Participant, Date, Participant Signature

Name of Person taking consent (usually the researcher), Date, Signature.

Finally, include information on how to contact your module tutor (or other designated Department contact).

Implicit Consent

In some situations, it may be appropriate to provide participant information without requiring a consent form which identifies the participant. An example might be if you are asking someone to answer a few questions anonymously at an exhibition. As long as you make it clear that participation is voluntary, agreeing to answer questions anonymously (verbally or by completing a questionnaire) can be regarded as implying consent to participate. However, the participant information provided should still meet the standards set out in the sections above.

Apply for research ethics approval (taught courses): Dan Brackenbury.

—

RESEARCH

WHAT IS AI?

AI = The ability of computers and systems to perform tasks that typically require human cognition.7

A BRIEF HISTORY OF AI

We tend to think of the introduction of AI in daily practice as a relatively new concept made real. For most of us it entered our lives unassumingly as a set of coded algorithms employed to activate chatbots or to empower tools that expedite tasks. Today, it poses questions on social, political, environmental and cultural levels and has impacts for all of us in working society.

Strikingly, we can find references to AI beginning in antiquity within Greek mythology, with the introduction of Talos, an automaton gifted to Minos by Hephaestus, the god of fire.3 Over the centuries since these myths were founded, a long tradition evolved within popular myth, culture and science fiction in which Artificial Intelligence has been a constant. From the Brazen Heads attributed to the late medieval scholar Roger Bacon,3 to more recent interpretations such as Spencer Brown and Sarah Govett's T.I.M. (Altitude/Stigma Films),4 AI, it seems, has always been present in the human mind. But who were the real protagonists behind AI, and how did we get to where we are today?

—

"The time will come when the machines will hold the real supremacy over the world and its inhabitants is what no person of a truly philosophic mind can for a moment question."5

"There is no security… against the ultimate development of mechanical consciousness… reflect upon the extraordinary advance which machines have made during the last few hundred years, and note how slowly the animal and vegetable kingdoms are advancing."6

Samuel Butler (Cellarius).

—

BEGINNINGS

In the 1940s and 50s, a handful of scientists from a variety of fields (mathematics, psychology, engineering, economics and political science) began to discuss the possibility of creating an artificial brain initially as a reaction to the existential threat of the atomic age. Alan Turing was the first person to carry out substantial research in the field that he called Machine Intelligence.3

ALAN TURING

In 1950 Englishman Alan Turing wrote an academic paper entitled 'Computing Machinery and Intelligence', posing the question of whether or not machines could think. The premise of his proposal was a triangular game with three participants: C) an interrogator asking the questions, A) a man acting as a disruptor in the process by answering both falsely and truthfully, and B) a woman answering the interrogator's questions truthfully. The interrogator's task was to determine which of the parties A) and B) was the man and which the woman. The interrogator knew the parties only as X and Y, and it was his task to determine whether X = A the man and Y = B the woman, or X = B the woman and Y = A the man. The man's goal in the process was to cause the interrogator to make the wrong identification. The interrogator was isolated from the others, and questions and answers (what we now call prompts) would be sent between rooms via a teleprinter. This eliminated any interference in the process from the tone of voice of the participants. The resulting data could then be gathered to determine the number of times the interrogator answered incorrectly. He then asked whether, if A) were replaced by a machine, the interrogator would decide wrongly as often as when the game was played between a man and a woman, returning to his initial question 'can machines think?'. He called this process 'The imitation game'.1 Turing's 'Imitation Game' became known as the Turing Test, whereby a machine was said to think if an interrogator could not tell it apart, through conversation, from a human being.2

At first sight what is prescient about this paper is the similarity between the process it describes and the process we employ in our interaction with what are still essentially machines, albeit that the parties are reduced to two, with you as interrogator 'prompting' and the 'machine' as the knowledge base. The separation between machine and interrogator is still present in our contemporary process; however, I do not believe we feel this in our present-day interactions with technology due to familiarity, i.e. for us today it is all happening in real time in our physical place of work or study.

In critiquing his own process Turing rejects the idea of the physical machine as robot, android or cyborg, while at the same time speculating on a future where "a material which is indistinguishable from the human skin" could be possible [2. Critique of the New Problem].1 Instead he envisions a digital computer in which questions can be asked in succession to determine mathematical answers.1

What Turing does predict is that the "manner of operation cannot be satisfactorily described by its constructors because they have applied a method which is largely experimental" [3. The Machines Concerned in the Game]. This is one of the existential threats that modern programmers of AI face in not always understanding how the AI is working to determine answers, and it poses the question 'has AI reached sentience?'.1 His paper is a layered, detailed analysis of this initial question and reveals as much about his thought processes and scientific interest as it does about AI itself. For example, in section 3, 'The Machines Concerned in the Game', he poses that "it is probably possible to rear a complete individual from a single cell of the skin", which predicts the breakthroughs we see in stem cell research today.1

He saw the relationship between man and machine as one of equals, in that the data programmed into and stored by the machine would 'mimic' human knowledge. He proposed that a coded table of instructions or book of rules would represent the content stored and that this data would then be filtered by an inbuilt 'control' to ensure the information output would be accurate. In section 4, 'Digital Computers', he asserts that each machine "must be programmed afresh for each new machine which it is desired to mimic". If we take machine to mean AI in this context, his visionary approach rings true today in the various ways AI is programmed and employed to conduct different tasks, whether for writing, image making or layout creation, or for more advanced technological and scientific practice.1 Turing himself believed that the answers obtained from machines would not always be correct, comparing this to human knowledge in that no one man would have all the answers either. His point was that, in building and programming a digital machine that could play the 'game' of mimicry and respond to prompts, "there might be men cleverer than any given machine, but then again there might be other machines cleverer again". However, he also envisaged that machines could learn and continue to do so: "a machine undoubtedly can be its own subject matter. It may be used to help in making up its own programmes, or to predict the effect of alterations in its own structure" [5. Arguments from Various Disabilities].1 He also argued that "Instead of trying to produce a programme to simulate the adult mind, why not rather… the child's? … subjected to an appropriate course of education one would obtain the adult brain." [7. Learning Machines].1 This foresight in the programming and training of AI, especially in the field of robotics, has helped in the development of both machine learning and neural networks that are analytical but not yet sentient.

Turing could see the possibilities in generative machine learning whilst being frustratingly aware that he was thinking 50 years into the future, with the computers of the day lacking memory, power and speed. He concluded his paper with "We can only see a short distance ahead, but we can see plenty there that needs to be done."1

…

THE DEVELOPMENT AND NAMING OF AI

In the years that followed the Turing Test, mathematicians, cognitive and computational scientists, psychologists and neuroscientists collaborated towards the development of an electronic brain as a reaction to the existential threats posed by the atomic age. In 1951, with new insights gained into how the human brain's neurological synapses work, American computer and cognitive scientists Marvin Minsky and Dean Edmonds realised the first artificial neural network or ANN.7 This was based on research and mathematical prototyping conducted in 1943 by American logician and computational neuroscientist Walter Pitts and neurophysiologist and cybernetician Warren McCulloch in their analysis of artificial neurons, which were used to conduct simple logical functions.3 Their work was influenced by an earlier paper published by Turing, "On Computable Numbers, with an Application to the Entscheidungsproblem", which responded to a problem posed by the German mathematician David Hilbert and showed that "some purely mathematical yes–no questions can never be answered by computation".8 Minsky and Edmonds named their machine the SNARC (Stochastic Neural Analog Reinforcement Calculator); this 'brain' was built with 40 memory banks or synapses that were able to respond to simple inputs. Working in much the same way as a real brain does, the memory banks would sometimes capture inputs and sometimes not. However, the brain could only remember data for a limited amount of time. If the brain was able to capture inputs, it would output a simple answer to the input data. This machine was considered pioneering in proving the concept of machine intelligence.9 In 1952 this research was built upon with the development of a checkers-playing program by computer scientist Arthur Samuel that displayed machine learning. The machine could pinpoint any given checker position on the board and calculate moves that would lead to winning the game.10 The program was among the world's first successful self-learning programs,3 and Samuel went on to coin the term 'machine learning' in 1959.7 The evolution of artificial neural networks was further developed between 1958 and 1962 with the building of Perceptron machines by American psychologist Frank Rosenblatt, which were designed to learn by practice. Rosenblatt is widely considered to be the father of deep learning.11
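The 'simple logical functions' attributed above to McCulloch and Pitts's artificial neurons can be illustrated with a single threshold unit. The following is a minimal sketch, assuming binary inputs and hand-picked weights and thresholds. The function names are illustrative and are not taken from the 1943 paper, and the negative weight used for NOT is a modern simplification of inhibition.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_neuron([a, b], [1, 1], threshold=1)


def logical_not(a):
    # Assumption: inhibition is modelled as a negative weight.
    return threshold_neuron([a], [-1], threshold=0)


# Print the truth table for AND and OR.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Nothing in this unit is learned: the weights and thresholds are fixed by hand. That is the contrast with the later Perceptron machines, which were designed to learn by practice.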

In 1956, during the Dartmouth Workshop, a pivotal event that recognised machine learning as an accepted academic discipline, the American computer and cognitive scientist John McCarthy coined the term Artificial Intelligence. He proposed this rebrand from machine learning to AI to avoid any connection with cybernetics. The workshop was formulated to unpack the possibilities of human intelligence simulated by machines, marking a focused exploration in the field of AI. Its organisers posed the premise that "every aspect of learning or any other feature of intelligence can be so precisely described that a machine can be made to simulate it". This event is widely considered to be the birth of AI.3

In 1958 McCarthy went on to develop the programming language Lisp which gained enormous popularity among AI developers.

RISES AND FALLS IN AI ADVANCEMENT

In the 1960s research and development continued apace in the fields of language processing (Daniel Bobrow/Student 1964), organic molecular identification (Edward Feigenbaum, Bruce G. Buchanan, Joshua Lederberg and Carl Djerassi/Dendral 1965), chatterbots (Joseph Weizenbaum/Eliza 1966), robotics (Stanford Research Institute/Shakey 1966) and deep learning algorithms (Arthur Bryson and Henry J. Kelley/Backpropagation 1969). At the end of the decade Marvin Minsky and Seymour Papert published the book Perceptrons, which described the limitations of simple neural networks and caused neural network research to decline. This, combined with James Lighthill's report "Artificial Intelligence: A General Survey", led to a slow decline in further research and development in the field of AI.7 This period, between 1974 and 1980, became known as the first AI winter, a term coined by Marvin Minsky and Roger Schank in 1984 at a meeting of the Association for the Advancement of Artificial Intelligence. The AI winter was partly due to a lack of useable outcomes from the work conducted up to this date, technological limitations and a lack of funding.7

By the dawn of the 1980s AI "expert systems" programs were beginning to be adopted globally. The earliest example of an expert system in use was one that identified organic molecular compounds using a spectrometer (Edward Feigenbaum, Bruce G. Buchanan, Joshua Lederberg and Carl Djerassi/Dendral 1965). This was considered to be a small domain of specific knowledge. To realise the full potential of expert systems, a shift away from specific knowledge domains was needed, with the focus for AI research directed towards unpacking 'commonsense knowledge'. The switch to expert systems, with their in-built flexibility, proved useful. This was later verified with the success in 1980 of an expert system called XCON (or R1), which, with 95-98% accuracy, saved the Digital Equipment Corporation over 40 million dollars annually. In the shadow of this success global corporations began to follow suit.3 By 1985 corporations were spending billions on in-house AI systems, and software and hardware industries grew out of a need for technical support.

Advancements in AI during this period (1981-1987) extended across many fields of expertise. Supercomputers were used for exploratory research in the fields of astrophysics, aircraft design, financial analysis, genetics, computer graphics, medical imaging, image understanding, neurobiology, materials science, cryptography, and subatomic physics (Danny Hillis, American inventor, entrepreneur, and computer scientist/Parallel Computers 1981).13 Programs were developed to analyse and give viable answers to probable causes and effects (Israeli-American computer scientist and philosopher Judea Pearl/Bayesian Networks 1985).14 Unfortunately, AI's practical application within the business sector was not seen as viable. This, combined with an economic downturn in which businesses were failing, meant that interest in the technology began to wane and AI entered its second winter. Apple and IBM's entry into the market with the development of desktop computing also affected progress within the AI computing community. Funding was cut and an industry worth half a billion dollars collapsed overnight. Funds had been redirected towards projects that were more likely to produce immediate results. This, however, did not deter progress, with numerous researchers calling for a new approach to AI and its practical application in the field of robotics.3 Academic advances were also made in the field of language translation (A Statistical Approach to Machine Translation/Peter Brown et al. 1988),7 and in 1989 a team of computer scientists showed how neural networks could be used to identify handwritten characters, demonstrating a practical application to real-world problems (Convolutional Neural Networks/French-American computer scientist Yann LeCun, Canadian computer scientist Yoshua Bengio and Applied Scientist Patrick Haffner 1989).7

AI'S RENAISSANCE

By the early nineties, advances in computing power and a shift in focus towards specific, isolated problems led AI researchers, often working incognito, to separate their fields of practice into specialised groups. Applying the highest standards of scientific accountability, AI was both more cautious and more successful than it had ever been in reaching its oldest goal: to create generative AI that could match and even outshine human intelligence.3 Advances were made in processing data, including speech and video (Recurrent Neural Networks/German computer scientists Sepp Hochreiter and Jürgen Schmidhuber 1997), and in the same year Russian chess grandmaster Garry Kasparov was beaten by the supercomputer Deep Blue (Deep Blue/IBM 1997).7 The first image data mining program was also developed during this renaissance period, enabling AI to decipher and define what it was looking at (ImageNet/Chinese-American Computer Scientist Fei-Fei Li 2006), and in 2011 IBM's Watson beat the all-time 'Jeopardy!' champion, Ken Jennings.7

Over the next ten years the race was on to aid STEM research, and the creation of human-like AI was drawing closer.

…

SOURCES

1) Turing, A. M. (1950) Computing Machinery and Intelligence. Mind 59: 433-460.

2) Harnad, Stevan (2008) The Annotation Game: On Turing (1950) on Computing, Machinery, and Intelligence (PUBLISHED VERSION BOWDLERIZED). In: Epstein, Robert, Roberts, Gary and Beber, Grace (eds.) Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer. Evolving Consciousness. Springer, pp. 23-66.

3) https://en.wikipedia.org/wiki/History_of_artificial_intelligence

4) https://en.wikipedia.org/wiki/T.I.M._(film)

5) Butler, Samuel (Cellarius) (1863) 'Darwin among the Machines'. The Press, 13/06/1863, Christchurch, New Zealand.

6) https://en.wikipedia.org/wiki/Darwin_among_the_Machines

7) https://www.techtarget.com/searchenterpriseai/tip/The-history-of-artificial-intelligence-Complete-AI-timeline

8) https://en.wikipedia.org/wiki/Turing%27s_proof

9) https://en.wikipedia.org/wiki/Stochastic_Neural_Analog_Reinforcement_Calculator

10) https://en.wikipedia.org/wiki/Arthur_Samuel_(computer_scientist)

11) https://en.wikipedia.org/wiki/Frank_Rosenblatt

12) https://en.wikipedia.org/wiki/Commonsense_knowledge_(artificial_intelligence)

13) https://en.wikipedia.org/wiki/Danny_Hillis

14) https://en.wikipedia.org/wiki/Judea_Pearl

Note: After a webinar with Dan Brakenbury I have realised that I have unfortunately taken the wrong route for my research. In the following proposal I hope to make my direction clearer and relate all research back to my question.

—

Written Proposal, Research Plan & Ethics Review